MySQL Bits - Large MySQL Database Backup

Posted 17 Years Ago

The Problem

One of the sites I own (http://www.writerscafe.org/) has managed to attract a good number of users and a lot of user-created content. This, of course, is a grand old thing and what we all strive for. But when your site starts getting large, new and more complex problems arise which don't have such easy solutions.

One such problem I faced was backing up a large database (almost 9GB). I tried the basic methods first (MySQL Dump, GUI MySQL Backup Programs, MySQL Hot Backup, etc.), but none of them did exactly what I wanted. What's more, all of them inevitably locked up important MySQL tables for an unacceptable period of time. For example, the table which stores all the private messages has almost 4 million rows and weighs in at a good 5.8 GB.

The Solution

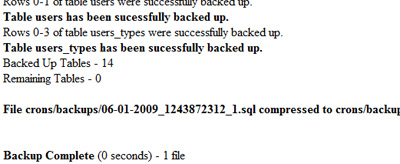

The solution I came up with was to write a script which loops through the database, backing up rows in chunks, then saving them to a file, and looping through again. This meant that instead of SELECTing all 4 million rows at once, I was only grabbing 10,000, which is a much shorter query to run.

It also breaks the backup into several files. After playing around with the script a little, I discovered that you could not create a backup file that's over 2GB. The reason is that PHP's fopen function can't handle more than 2GB (unless you do some server stuff which isn't worth the trouble). So the script will (in theory) break up the backup into files of 2GB (or whatever size you set) if the database exceeds that size.
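
To make the chunking idea concrete, here is a rough sketch of the two decisions the loop has to make: which rows to grab next, and when to roll over to a new file. It is not the code from backup.php. The helper names (`chunk_ranges`, `next_file_index`) and the `messages` table are made up for illustration, and it only prints the queries instead of running them.

```php
<?php
// Hypothetical helper: yields [offset, limit] pairs that cover $totalRows rows
// in steps of $perLoop (the same idea as the script's rows-per-loop setting).
function chunk_ranges(int $totalRows, int $perLoop): Generator
{
    for ($offset = 0; $offset < $totalRows; $offset += $perLoop) {
        yield [$offset, min($perLoop, $totalRows - $offset)];
    }
}

// Hypothetical helper: decide which numbered backup file the next chunk goes into.
// Moves on to the next file when adding $chunkBytes would push a non-empty file
// past $maxFileSize bytes.
function next_file_index(int $currentIndex, int $currentSize, int $chunkBytes, int $maxFileSize): int
{
    return ($currentSize > 0 && $currentSize + $chunkBytes > $maxFileSize)
        ? $currentIndex + 1
        : $currentIndex;
}

// Walk an imaginary 25,000-row table 10,000 rows at a time.
foreach (chunk_ranges(25000, 10000) as [$offset, $limit]) {
    echo "SELECT * FROM messages LIMIT $offset, $limit\n";
}
// Prints:
// SELECT * FROM messages LIMIT 0, 10000
// SELECT * FROM messages LIMIT 10000, 10000
// SELECT * FROM messages LIMIT 20000, 5000
```

In a real run each query result would be turned into INSERT statements and appended to the current file before the next chunk is fetched, so only one chunk is ever held in memory.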

Then, when it's done creating the backup, it creates nice little .gz files so it's easier to download.
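
For the compression step, something along these lines would do the job. This is a minimal sketch, assuming PHP's zlib extension is installed. The function name `gzip_file` and the 512 KB read size are my own choices for the example, not necessarily what backup.php does.

```php
<?php
// Compress $source into "$source.gz", reading in small blocks so memory use
// stays flat no matter how big the .sql file is. Returns the path of the .gz file.
function gzip_file(string $source, int $level = 9): string
{
    $target = $source . '.gz';
    $in = fopen($source, 'rb');
    $out = gzopen($target, 'wb' . $level); // e.g. mode 'wb9' = write, max compression
    if ($in === false || $out === false) {
        throw new RuntimeException("Could not open $source or $target");
    }
    while (!feof($in)) {
        $block = fread($in, 524288); // 512 KB at a time
        if ($block === false) {
            break; // read error: stop rather than loop forever
        }
        gzwrite($out, $block);
    }
    fclose($in);
    gzclose($out);
    return $target;
}
```

Calling `gzip_file()` on each finished .sql file leaves a .gz copy next to it. Whether to delete the uncompressed original afterwards is left to the caller.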

I've named this little script MySQL Bits because it backs up the database in bits (I was going to go with 'Chunks' but the name didn't sound quite so appealing). Below is a link to the backup.php file which handles the backup:

Basic Configuration

The script should be all ready to go. The only things you HAVE to edit are the MySQL database connection and the folder you'd like to save the backup(s) to. Here's what that code looks like:

// MySQL Variables

define('MY_S','localhost'); // Server (usually 'localhost')

define('MY_D','charles_website'); // Database Name

define('MY_U','database_username'); // Username

define('MY_P','database_password'); // Password

// Relative Folder to Save To

$path = "backups/";

Should be simple enough (I 'defined' my MySQL variables because it makes them easier to use in functions). The $path variable is a path relative to the backup script. In the example script I simply created a backups/ folder and saved the backups there. Note: you must change the permissions on this folder to 0777.

Advanced Configuration

Of course, I know we all like to have control over our scripts so there are a few other options you can play around with:

| Variable | Setting | Description | Default |
| --- | --- | --- | --- |
| $pp | Rows Per Loop | This is the number of rows each loop will select from the database before updating the .sql file. If a table has fewer rows than the $pp value, the script will continue on to the next table until it reaches $pp. If a table has more rows than the $pp value, it will select that many rows, add them to the .sql file, then continue on where it left off. | 10000 |
| $max_file_size | Max File Size (in bytes) | As I mentioned, PHP's fopen function can't handle more than 2GB so I've set the default (2000000000 bytes) to slightly below 2GB (2147483647 bytes). You can change this if you want to be able to download smaller backup files. | 2000000000 |
| $name | File Name | The format of the backup's filename. I recommend using some sort of date format. You should NOT include .sql in the file name. Note: the filename is appended with _x.sql to accommodate backups which are split into multiple files. | MM-DD-YYYY_Timestamp |
| $life | Backup Life (in days) | This is the number of days you want to keep this backup on your server. The backup will be deleted after this many days. The default is 30 so, if you were running a weekly backup, you'd have approximately 4 backups on your server at all times. | 30 |
| max_execution_time / mysql.connect_timeout / set_time_limit | PHP Timeout (in seconds) | We don't want our script timing out before it's completed (my backups can take a couple of hours) so we up the server's timeout settings. Note: these may not work on all servers because of your permissions. | 7200 (2 hours) |
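
As an illustration of how the $life setting could be enforced, here is a small sketch that deletes compressed backups older than a given number of days. The function name `prune_backups`, the `*.gz` pattern, and the use of each file's modification time are assumptions made for the example. The real script may track backup age differently.

```php
<?php
// Delete .gz files in $path that were last modified more than $life days ago.
// $now can be passed in to make the cutoff predictable; it defaults to time().
// Returns the list of files that were actually deleted.
function prune_backups(string $path, int $life, ?int $now = null): array
{
    $now = $now ?? time();
    $cutoff = $now - $life * 86400; // 86400 seconds in a day
    $deleted = [];
    foreach (glob(rtrim($path, '/') . '/*.gz') ?: [] as $file) {
        if (is_file($file) && filemtime($file) < $cutoff && unlink($file)) {
            $deleted[] = $file;
        }
    }
    return $deleted;
}
```

With the defaults from the table, `prune_backups($path, $life)` run at the end of each backup would keep roughly the last 30 days of files in the backups/ folder.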

The Limitations

There are a few problems I've encountered with the script. I'm going to keep working on it, and feel free to offer advice.

2GB Limit

The multi-file part of the script works: I've tested it with a $max_file_size of 50000000 (about 47 MB) and it works fine. However, once it has backed up about 2GB worth of data (into one or many files) it craps out. This is because the fopen() function can't handle more than 2 GB.

I've worked around it by setting up a cron job to run daily. On Sunday it starts the backup (storing the progress in the db) and each day after that it picks up where it crapped out the day before.
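
The pick-up-where-it-left-off part boils down to saving a checkpoint after every chunk and reading it back at the start of the next run. The sketch below keeps the checkpoint in a small JSON file purely to stay self-contained. My script stores its progress in the database instead, and the function names and the `table` / `offset` fields are invented for the example.

```php
<?php
// Load saved progress, or start from the beginning if there is none
// (or if the checkpoint file is unreadable).
function load_progress(string $file): array
{
    $fresh = ['table' => null, 'offset' => 0];
    if (!is_file($file)) {
        return $fresh;
    }
    $data = json_decode(file_get_contents($file), true);
    return is_array($data) ? $data : $fresh;
}

// Record which table we are in and how many rows of it are already backed up.
function save_progress(string $file, string $table, int $offset): void
{
    file_put_contents(
        $file,
        json_encode(['table' => $table, 'offset' => $offset]),
        LOCK_EX // avoid a half-written checkpoint if two runs overlap
    );
}
```

Each daily cron run would call `load_progress()` first, skip ahead to the saved table and offset, and call `save_progress()` after every chunk it writes. Once the last table is finished, deleting the checkpoint lets the following Sunday start from scratch.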

SELECTing End of Large Tables

Selecting rows near the end of a large table (SELECT * FROM .... ORDER BY .... LIMIT 10000000,10000) runs rather slowly, since MySQL has to read past the first 10,000,000 rows before it returns anything. The script uses the Primary Key to SELECT rows where it can, but that's also slow (SELECT * FROM .... WHERE primary_key > 10000000 AND primary_key <= 10010000 ORDER BY primary_key ASC).
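
One variation that might be worth trying is to remember the last primary key actually written and ask for the next batch after it, rather than computing fixed key ranges. This is usually called keyset (or "seek") pagination. It is a different technique from the range query above, and I can't promise it fixes the slowness on any particular server. The sketch only builds the SQL string. It assumes an integer primary key and trusted table and column names, and the function name and the `messages` / `id` names in the usage note are made up.

```php
<?php
// Build the query for the next chunk: the first $perLoop rows whose key is
// greater than the last key we already backed up.
// NOTE: $table and $key are interpolated as identifiers, so they must come from
// your own code (e.g. SHOW TABLES output), never from user input.
function next_chunk_sql(string $table, string $key, int $lastKey, int $perLoop): string
{
    return sprintf(
        'SELECT * FROM `%s` WHERE `%s` > %d ORDER BY `%s` ASC LIMIT %d',
        $table,
        $key,
        $lastKey,
        $key,
        $perLoop
    );
}
```

For example, `next_chunk_sql('messages', 'id', 10000000, 10000)` gives a query that always returns up to 10,000 rows even when there are gaps in the ids. After each chunk, the largest id in the result becomes the `$lastKey` for the next call.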

I think this is more of a MySQL / Server bottleneck issue and I haven't found anyone who has a possible solution except tweaking the server's my.cnf file.